Various Models for Connecting to

Databases via the IDatabase Interface

Efficiently Distributing Database Queries

Across One or More Servers

A web service application running on a web server is one of the most common use cases for RootDB with RQL when accessing databases. While a web server can operate on a single machine, applications requiring higher performance can distribute their database workloads across multiple computers within the web server's network.

Currently, RootDB database connections are primarily based on JDBC, allowing both localhost and remote connections to various database systems. However, JDBC is not mandatory for RootDB, as the IDatabase interface can be implemented using any communication channel supported by the underlying persistence storage system.

The only RootDB components that utilize JDBC are the IDatabase implementations specifically designed for database products that support JDBC.

RQL queries are processed by splitting them according to the scope of methods in the IDatabase interface. These methods operate at a lower level than RQL and execute queries without awareness of objects, ensuring efficient query resolution.

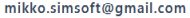

Using Localhost Databases for

Applications on a Single Computer

When a web server and its databases run on a single computer, JDBC-enabled databases are accessed via a JDBC localhost connection. The image below illustrates this setup, along with an alternative approach using the Xodus database, which operates without a predefined schema and allows direct access.

The Role of IDatabase in Query Execution and Performance

The implementation of IDatabase plays a critical role in query execution, as it, together with the database product, handles the most time-consuming part of the process. In particular, database queries represent the heaviest workload, with IDatabase implementations frequently performing operations comparable to SQL queries. These queries generate result sets that must be filtered and processed record by record to produce the final output as defined by the respective IDatabase method.

In contrast, the RootDB components that manage objects and control IDatabase calls consume significantly less execution time. Their operations typically represent only a small fraction of the total query execution time, highlighting the importance of optimizing IDatabase implementations for performance efficiency.

Remote Database Execution for Improved Performance in RootDB

Since the database itself handles a significant portion of the execution time in RootDB's database searches, an effective way to distribute the workload is to move the database and its execution to a separate computer. This approach reduces the processing burden on the web server and improves overall system performance.

Implementing this solution is straightforward with JDBC, which enables remote database connections without requiring changes to the application logic. The details of this approach will be discussed next.

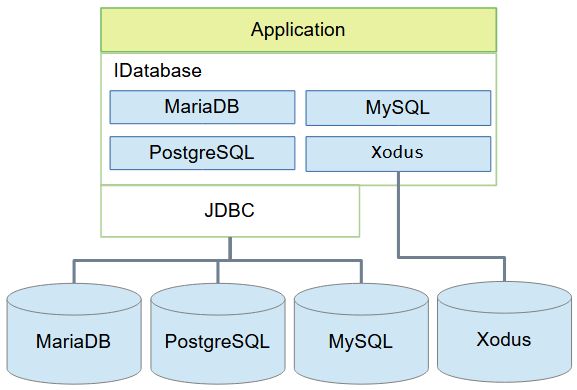

Accessing Databases Remotely Over

a TCP/IP Network

Remote Database Access via JDBC: Benefits and Performance Considerations

JDBC allows databases to be accessed both locally and remotely over a TCP/IP network with just a simple configuration change in the database connection settings. Offloading database execution to a dedicated machine optimized for database performance improves overall efficiency and increases throughput.

However, despite the advantages of a remote database server, the IDatabase implementation still runs on the web server, adding to its workload by executing database searches and processing query results to generate the final output as defined by the respective IDatabase method. Additionally, network communication over TCP/IP introduces some latency, partially reducing the performance gains of executing the database on a remote computer

As the most advanced option, a solution has been developed and implemented where the query methods of the IDatabase implementation are executed on a remote computer, fully relieving the web server from database query-related operations.

Distributing Database Usage with the

IDatabase Client-Server Model

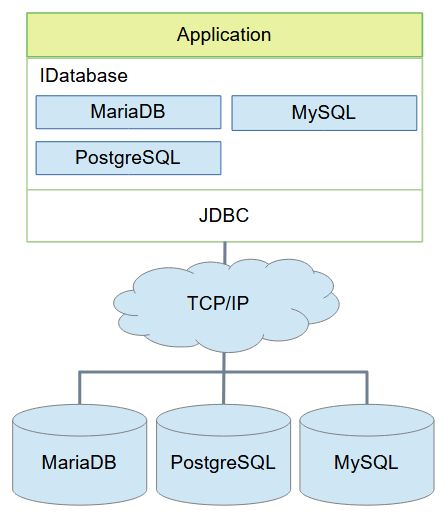

ProxyClient Forwards Database Queries to ProxyServer(s) for Execution

Splitting IDatabase Execution for Efficient Remote Database Access

The IDatabase implementation can be structured so that, on the web server side, it only collects method parameters, serializes them, and sends them over a TCP/IP connection to a remote database server for execution. This approach is particularly efficient for database queries since query definitions typically contain small amounts of data, making serialization lightweight and fast. Query results must also be serialized before being returned to the web server, but the impact on performance depends on the size of the result set. In most cases, only specific data or objects are retrieved, rather than large portions of the database, making distributed IDatabase query execution highly advantageous for improving web server performance.

However, IDatabase store operations (such as SQL insert and update methods) present a different challenge. Their parameters often include complex object graphs, leading to time-consuming serialization. Additionally, in real-world applications, bulk data writes—sometimes involving millions of objects—are common. To avoid performance degradation, store methods remain implemented on the web server, using a direct JDBC connection over TCP/IP to interact with the database server. This hybrid approach ensures that query execution is efficiently distributed, while high-volume data store operations remains optimized for direct database interaction.

Software Architecture for Distributed Database Execution

The software architecture is illustrated in the image above. On the web server side, the implementation consists of three key components: DatabaseClient, ProxyClient and IDatabase (X IDatabase) implementations for different database products.

- DatabaseClient serves as a wrapper for the IDatabase query methods, as their actual execution now takes place on the database server, where the database-specific implementations of IDatabase reside.

- Instead of executing queries directly, DatabaseClient simply collects the IDatabase query method parameters and uses the ProxyClient to transfer them to the database server for execution.

- X IDatabase refers to database product-specific implementations, as previously illustrated in "Accessing Databases Remotely Over a TCP/IP Network". These implementations enable JDBC connections to databases, whether accessed locally on the web server or remotely on another computer. The IDatabase implementations for MariaDB, MySQL, and PostgreSQL are exclusively designed to operate via JDBC connections.

Efficient Communication Between Web Server and Database Server

The communication between the web server and the database server is handled by ProxyClient and ProxyServer components. These implementations must support multiple concurrent threads, as RootDB queries rely heavily on parallel processing.

- In Java, the communication can be implemented using:

- Traditional socket-based client-server communication.

- Java NIO (Non-blocking I/O), which is more efficient for high-load systems.

- Java NIO avoids the need for one thread per connection and instead uses selectors to manage multiple connections efficiently.

- The current implementation uses sockets, but converting it to an NIO-based approach is straightforward.

Processing IDatabase Queries on the Database Server

On the database server side, ProxyServer receives IDatabase method calls and their parameters and forwards them to the corresponding database-specific IDatabase implementations.

- For example, MariaDB has its dedicated IDatabase implementation named MariaDBServer.

- When an IDatabase method is executed, its results are returned by ProxyServer to the client side.

- On the client side, the query results are relayed through ProxyClient and DatabaseClient back to RootDB, which originally initiated the IDatabase method call.

Object-Oriented Querying vs. Performance Optimization

Although RQL queries are expressed using object-oriented concepts, the queries sent from RootDB to IDatabase methods do not use objects, nor do the returned results.

- This is crucial for achieving maximum database performance, as object-based processing would introduce unnecessary overhead.

- Objects are only required in the communication between the application and the RootDB system, since the application’s object model is essential for executing its specific tasks.

Examples of Distributed Database Query Processing

Between the Web Server and Database Server

Significantly Reducing Web Server Workload

Through Database Query Distribution

A web server receives requests that must be processed as quickly as possible. In many cases, fulfilling these requests requires database queries, which can significantly impact server performance. When databases are hosted on the same web server, query execution consumes valuable computing resources, reducing the server's ability to efficiently handle incoming requests. Additionally, it is not just the database queries themselves but also the post-processing of result sets that can demand substantial processing power.

To optimize performance, it is beneficial to distribute database operations to dedicated database servers, which can be equipped with hardware specifically designed for high-performance query execution.

The examples below illustrate two real-world scenarios where database queries are executed over a local network—from a web server running Windows 10 to a database server on Linux. While both queries follow the same structure, the second database is approximately ten times larger, resulting in a significantly longer execution time.

These databases have been created with minimal indexing, meaning only ID fields are indexed (artificial indexing). While operating without indexes is uncommon in real-world applications, this setup helps simulate scenarios where database searches take an extended time to complete—a situation that can still occur in relational databases, even when indexing is in place.

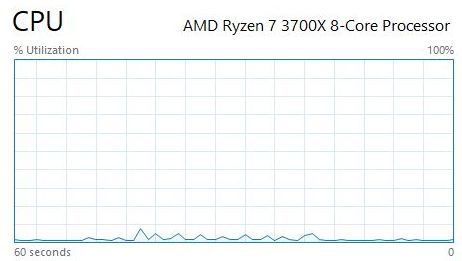

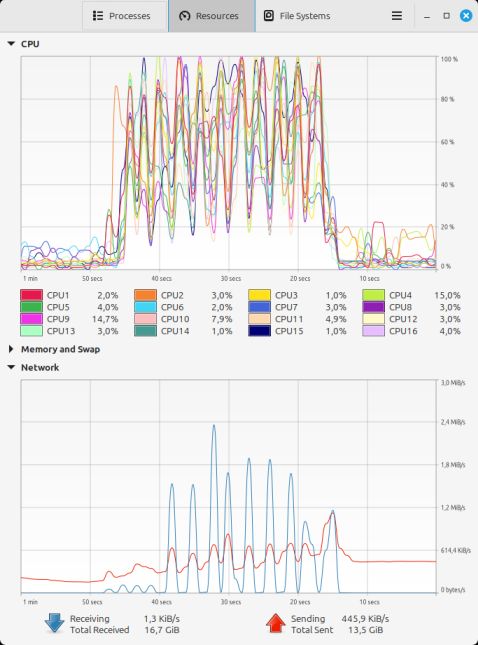

In the database tests, the workload of Windows 10 and Linux computers is illustrated through screenshots of their resource monitoring programs—Task Manager on Windows and System Monitor on Linux. Both computers have identical hardware, featuring an AMD Ryzen 7 3700X 8-Core Processor.

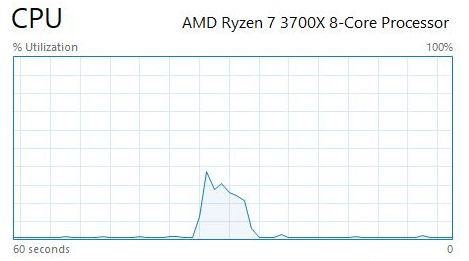

Executing a Database Query with an Approximate 10-Second Runtime

The first (topmost) Task Manager screenshot represents the scenario where the entire query execution occurs on the Windows computer. The peak CPU load reaches approximately 40%, while the average CPU load remains just above 20%.

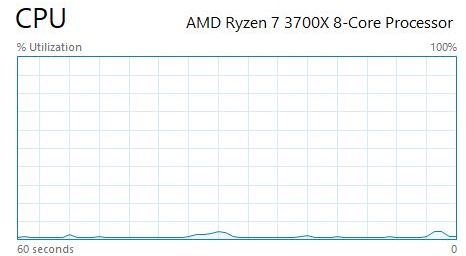

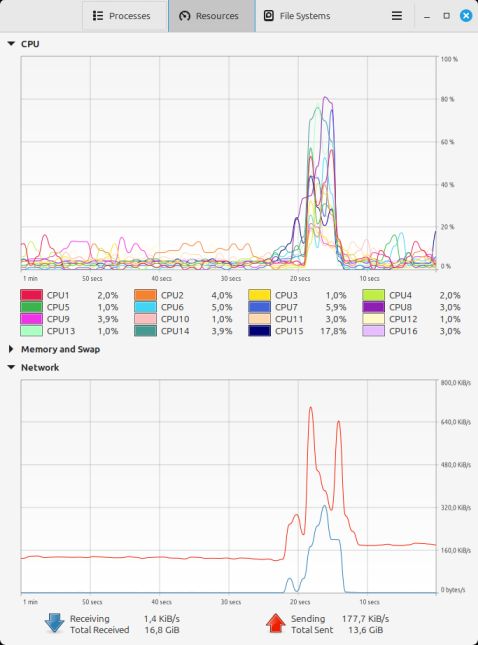

The second and third screenshots depict the client-server proxy model, where query execution is distributed between the web server and the database server. In this setup:

- The web server splits the query into sub-queries and further divides them into smaller tasks, optimized for parallel execution in the database server.

- The web server functions as a front-end, preparing queries into efficiently sized chunks for optimal execution.

- Although the web server plays a crucial role in controlling the query process, it consumes minimal CPU resources, which is evident in the CPU load image below.

This efficient workload distribution ensures that web server resources remain available for handling incoming requests, which is essential for maintaining high responsiveness and optimal performance.

A query test was conducted on a small object database running on a Windows 10 computer, with an execution time of approximately 10 seconds.

The execution of the same query test is distributed between a Windows 10 computer and a Linux computer with identical hardware. While the execution time remains nearly the same, the workload on the Windows 10 computer is reduced to an almost negligible level.

On the Linux computer, multiple threads operate intensively during the query test, handling both the computational load imposed by the database product (MariaDB) and the post-processing of record sets returned by the queries. Notably, the data is stored without any indexes that could accelerate query execution.

The received data (blue curve) consists of query definitions, while the sent data (red curve) represents the retrieved data identifying the objects found by the queries.

Executing a Database Query with an Approximate 35-Second Runtime

From the perspective of web server operation, the advantages of distributing database searches to one or more database servers become even more evident when performing long, resource-intensive queries or when multiple simultaneous searches place a heavy load on the database.

This test examines a database that is ten times larger than the previous one, with a structure that ensures the same search query retrieves ten times more objects in the result set. However, despite this increase in database size and result set, the execution time increases by only 3.5 times. This efficiency is achieved even though the database operates without any indexes for the queried data.

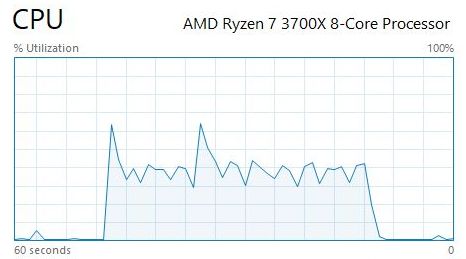

If the entire database search is executed locally on the web server, the query takes approximately 35 seconds, with an average CPU load of 40% and a peak load slightly exceeding 60%. The first screenshot below presents the CPU utilization graph from Windows Task Manager, demonstrating the significant strain placed on the web server’s resources, which would negatively impact its ability to handle multiple simultaneous requests.

The second and third screenshots illustrate the client-server proxy model, where query execution is distributed between the web server and the database server. This approach drastically reduces the workload on the Windows 10 web server, bringing it down to an almost negligible level. Meanwhile, on the Linux database server, multiple threads operate intensively, with many reaching near 100% CPU utilization during the query test. Despite this distribution of workload, the total execution time remains approximately the same as when the entire query runs on the Windows computer.

The Linux network traffic graphs further highlight the impact of a larger database. The received data (blue curve) now consists of narrow but high peaks, reflecting the fact that the query is divided into a greater number of smaller tasks, requiring a higher number of parallel threads for execution on the database server. The sent data (red curve) directly correlates with the tenfold increase in objects retrieved, further demonstrating the effectiveness of distributing query execution across a dedicated database server.

A query test was performed on a database ten times larger than in the previous test. When executed entirely on the Windows 10 computer, the query runtime increased to approximately 35 seconds.

The execution of the same query test is distributed between a Windows 10 computer and a Linux computer with identical hardware. The minimal CPU utilization on the Windows 10 computer clearly demonstrates the efficiency of distributing intensive database operations to a dedicated database server.

On the Linux computer, the significantly larger database results in a substantial increase in parallel query execution. A higher number of concurrent searches and result set post-processing tasks fully utilize the CPU's computational power, maximizing system performance.

The received data (blue curve) forms a pattern of multiple peaks, reflecting the increased number of queries executed in parallel. The sent data (red curve) mirrors these peaks at the same points, representing the retrieved data that identifies the objects found by the queries.

Summary

JDBC serves as the fundamental mechanism that database products use to establish connections, whether locally or remotely over a network. The highest data transfer performance is achieved when both the application and its database reside on the same computer. While local network data transfer is generally considered highly efficient, its impact on performance becomes notably negative when handling large volumes of data. This scenario is less common during standard database queries but occurs more frequently during database creation, where vast amounts of data must be written to the database.

When executing a query, the query execution may be complex, requiring the database engine to process and scan a large number of records. However, when indexing is sufficiently comprehensive for the queries, the final result set typically consists of a relatively small number of records. As a result, the volume of transferred data is often negligible compared to network bandwidth, which justifies using a direct JDBC connection as an efficient approach for remote database access.

However, if the result sets returned by the database require significant post-processing, the amount of data transferred between the database server and the application (web server) increases considerably. The post-processing load is especially high when the database lacks indexing or when indexing is insufficient to efficiently support common database operations.

The primary reason for avoiding or minimizing indexing is that indexes can introduce significant inefficiencies in data insertion and maintenance, making them impractical in certain scenarios.

The client-server proxy model provided by the RootDB system is an efficient approach to distribute query operations defined in the IDatabase interface to database servers. These servers can support application servers or web servers that require high-performance database services. Typically, a database server operates within the same local network as the computers utilizing its services. When deployed alongside a web server, this local network is commonly referred to as the Demilitarized Zone (DMZ).

The primary advantage of using the client-server proxy model instead of a direct JDBC connection is that it distributes the post-processing of query results to the remote database server. This is particularly beneficial when comprehensive indexing is not available, as database searches can produce large result sets requiring extensive post-processing. If this processing were performed on the computer initiating the queries, it would also introduce significant data transfer overhead.

Performance tests have demonstrated that approximately 95% of query execution time is spent inside IDatabase method implementations. By shifting this workload to a dedicated database server, the application server (or web server) significantly reduces its resource consumption, improving overall responsiveness and its ability to serve client requests efficiently.

Additionally, the client-server proxy model enables an application server or web server to connect to multiple database servers, each hosting multiple databases across different database products. This approach provides greater flexibility, scalability, and efficiency in distributing database services within a network.

Links to other pages on this site.

- RootDB and RQL, Home

- RootDB, Technical Features

Page content © 2025

Contact us: